5 changes underway for R&D IT shifting to biologics

Back in 2000, the FDA approved only six biologics versus 27 new molecular entities (NMEs). Fast forward more than twenty years: by the end of 2022, biologic approvals outpaced NME approvals, signaling the ascent of biologics after decades of research.

The shift to biologics brings many changes, most importantly, improved patient outcomes and better coverage for rare diseases. The new modalities that have emerged in the last 20 years, including cell and gene therapies and RNA drugs, also upend the way work is done in the lab. Bringing biologics from the lab to patients requires more data, more complex data, and closer collaboration across specializations, which in turn requires R&D IT to evolve the lab's systems and processes.

This shift has been challenging for scientists as well. "Scientists have gone from working on bicycles to jet planes, but they’re still using the same toolkit," says Sajith Wickramasekara, Benchling CEO and co-founder.

In this article, we examine the inflection point that biologics represent and how legacy tools built for small molecules affect productivity and collaboration, and we share five reasons for optimism about IT for biologics.

"So many systems, I can’t even count."

The Executive Director of R&D IT at a leading pharmaceutical company paints a picture of the tech clash between biologics and small molecules:

"The new atmosphere feels like the Wild West. How many systems? So many I can’t even count. We started out as a small molecule company, and so our tools were built around that. Now, our scientists’ work is no longer contained in neat little boxes – it’s messy, we’re crossing borders, mixing small molecules with antibodies, mRNA and gene therapy.

We needed to adapt. So we have our own Frankenstein-type registration tools for entity registration. It’s working, but it’s not pretty. Not only can you not see the full molecule, but scientists are putting data across different locations, it's not all in one place. Data is being shared in slides, it's getting lost. We don’t have the tools that allow scientists to investigate the space as much as they should."

How did we arrive here?

Throughout most of the 20th century, pharmaceutical R&D focused on medicines built from small molecules designed to control diseases. Small molecules are generally easier to produce and more stable. In addition, the process of discovering small molecules is fairly uniform across therapeutic applications. In contrast, biologics, which are derived from or manufactured in living sources and often work by targeting specific chemicals or cells involved in the body’s immune system, can aim to cure disease rather than only control or treat it. Biologics commonly include large proteins, peptides, nucleic acids, or cells, and the details surrounding them are much more complex than those of small molecules.

Take identification as an example. A small molecule drug typically has a well-defined chemical structure, and this alone can be the basis for novelty checking and registration. With a biologic entity, however, the structure is much larger and potentially less well defined, which makes consistent depiction harder. Nucleic acid composition and chemical modifications on sequences complicate depiction further. Metadata, including the sample’s provenance and lineage, therefore plays a crucial role in determining whether the material is novel or is related to a previous sample, and if so, how.
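To make the contrast concrete, here is a minimal Python sketch of one simplified registration policy. It assumes a biologic can be represented as a sequence string plus a set of modification labels. Everything in it (`BiologicEntity`, `Registry`, the `BIO-` ID format) is hypothetical and is not any product's API. The structural key decides whether a submission duplicates an existing entity, while provenance and parent links record how an entity relates to earlier samples.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BiologicEntity:
    """Hypothetical record for a sequence-based biologic (illustrative only)."""
    sequence: str                          # amino-acid or nucleotide sequence
    modifications: tuple[str, ...] = ()    # free-text labels, e.g. ("N-term:acetyl",)
    parent_id: Optional[str] = None        # lineage: registry ID this was derived from
    source: str = ""                       # provenance, e.g. originating lab or batch


def canonical_key(entity: BiologicEntity) -> str:
    """Hash only the structure: normalized sequence plus sorted modifications."""
    seq = "".join(entity.sequence.split()).upper()
    mods = ",".join(sorted(entity.modifications))
    return hashlib.sha256(f"{seq}|{mods}".encode("utf-8")).hexdigest()


class Registry:
    """Toy in-memory registry: structure decides identity, metadata records lineage."""

    def __init__(self) -> None:
        self._id_by_key: dict[str, str] = {}
        self._records: dict[str, BiologicEntity] = {}
        self._counter = 0

    def register(self, entity: BiologicEntity) -> tuple[str, bool]:
        """Return (registry_id, is_novel); a known structure reuses its existing ID."""
        key = canonical_key(entity)
        if key in self._id_by_key:
            return self._id_by_key[key], False
        self._counter += 1
        reg_id = f"BIO-{self._counter:04d}"
        self._id_by_key[key] = reg_id
        self._records[reg_id] = entity
        return reg_id, True

    def lineage(self, reg_id: str) -> list[str]:
        """Follow parent links from an entity back to its root."""
        chain: list[str] = []
        current: Optional[str] = reg_id
        while current is not None and current in self._records:
            chain.append(current)
            current = self._records[current].parent_id
        return chain


# Usage: a parent sequence, a modified variant, and a re-submitted duplicate.
registry = Registry()
parent_id, _ = registry.register(BiologicEntity("EVQLVESGGG", source="lab A"))
variant_id, variant_novel = registry.register(
    BiologicEntity("EVQLVESGGG", ("N-term:acetyl",), parent_id=parent_id)
)
dup_id, dup_novel = registry.register(BiologicEntity("evql vesggg", source="lab B"))
print(variant_id, variant_novel)      # BIO-0002 True
print(dup_id, dup_novel)              # BIO-0001 False
print(registry.lineage(variant_id))   # ['BIO-0002', 'BIO-0001']
```

Real registration systems must also handle cases this sketch ignores, such as multi-chain molecules, partially characterized structures, and controlled vocabularies for modifications.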

Processes as complex as protein engineering or the construction of an antibody-drug conjugate overwhelm legacy informatics systems built around small molecules. The difficulties stem from the wide range of techniques and disciplines involved, the need to track metadata, and the sheer volume and variety of data in biologics research. In this environment, data harmonization is hard and collaboration is challenging.

Inflection point in biopharma

In the 1990s, Andy Grove, then CEO of Intel Corporation, popularized the concept of the strategic inflection point to describe any major change in the competitive environment that requires a fundamental change in a business's strategy. He warned that a strategic inflection point could be either an opportunity for further growth or the start of a decline. Proactive and timely adaptation is more likely to lead to a positive outcome, while reluctance to change or a failure to recognize the inflection point often leads to irreversible decline.

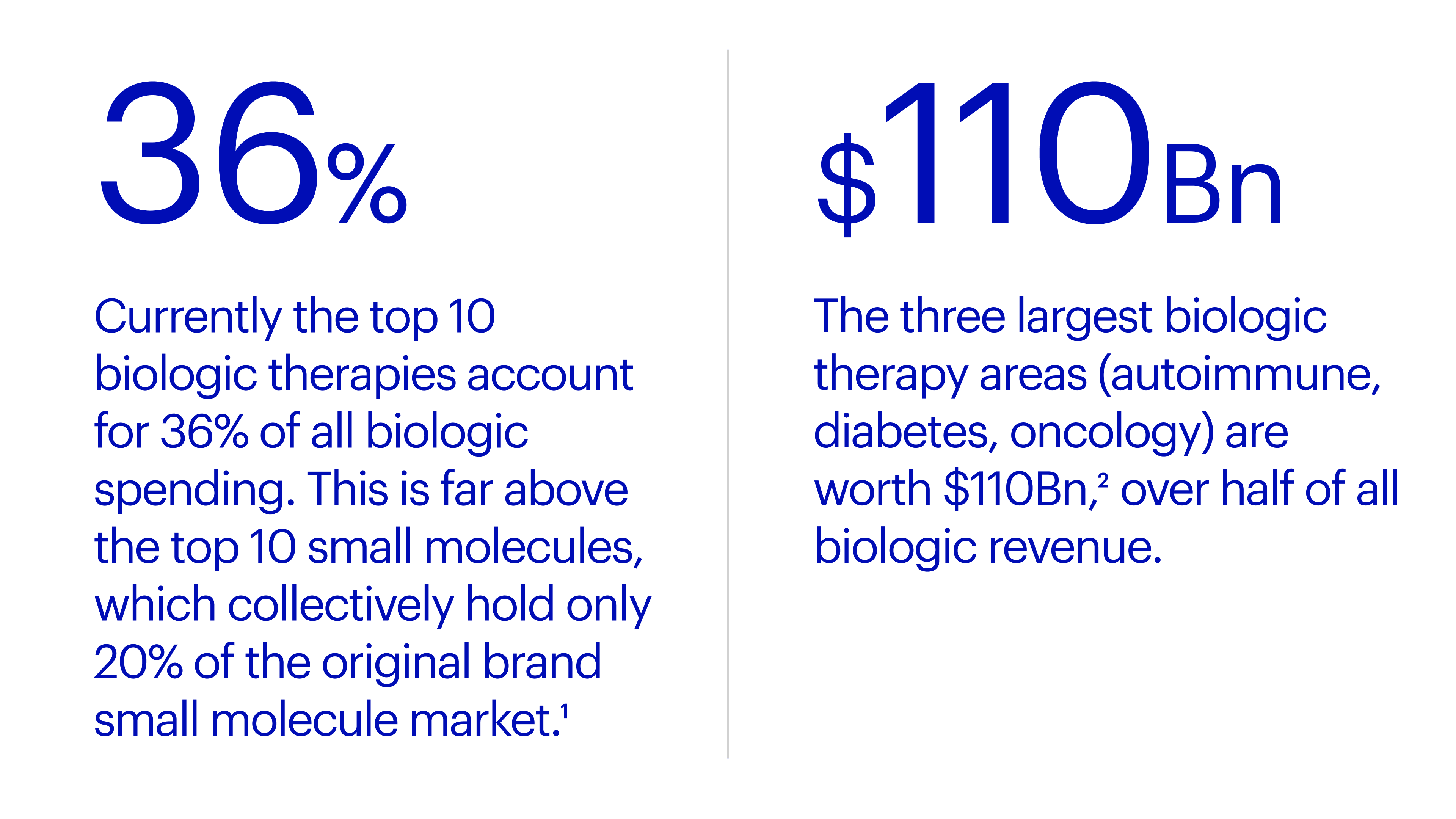

The shift to biologics is indeed a strategic inflection point for biopharma:

"As emerging technologies advance through development pipelines and bring new treatments for diseases, they create unprecedented opportunities for the biopharmaceutical firms that actively pursue them, and they put latecomers at a disadvantage…. To date, annual sales for new modalities (excluding mRNA) have reached approximately $20 billion, boosting the overall market cap for biopharma companies by more than $300 billion." (Boston Consulting Group, March 2023)

The industry is racing to invent and deliver better medicines faster, and it needs to develop a new tech strategy in parallel. Companies whose IT teams can align with R&D and rapidly adopt new tech stand to become the most productive biopharma companies, driving success for decades to come.

5 changes underway for IT

This is not a quick or easy transition. How is R&D IT, in particular, managing? Here’s where we’re seeing the most progress today.

1. A ‘follow the molecule’ data and tech strategy is key, and software is catching up to this need.

IT’s north star in this new environment: break down silos and integrate the data. The objective is for teams to work in an end-to-end ecosystem, with all data in one place, biological data linked to small molecule data, and everything harmonized so that scientists can see it regardless of modality or source. Achieving this is how teams use data to move faster and deliver better, safer patient outcomes.

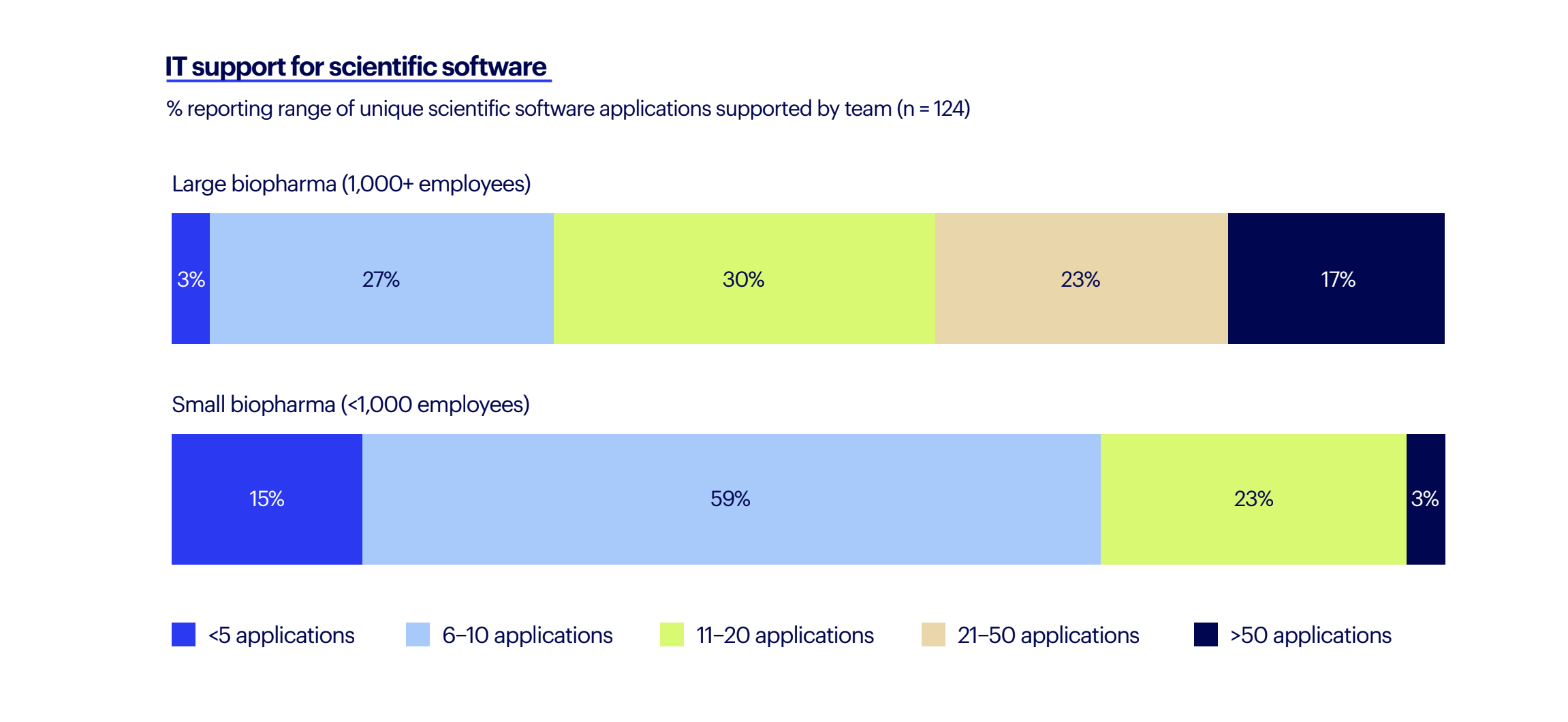

Benchling's 2023 State of Tech in Biopharma report illustrates the need: 40% of IT respondents at large biopharma companies support 20 or more unique scientific software applications for their teams alone. The situation is complicated by heavy reliance on custom-built tools that IT builds or manages itself. 84% of respondents, across R&D and IT, are using at least some custom-built software, with 51% of IT reporting that at least 8 out of 20 of those applications are custom-built. This is not ideal from a collaboration, security, or productivity perspective.

Teams need to manage samples and processes with seamless handoffs and complete traceability. IT sees a solution in taking an end-to-end approach to data and teams, with one platform spanning research, development, and manufacturing. The key is moving away from siloed apps toward a single interface: a central platform where scientists (and the IT teams supporting them) capture and manage data from research through process development and into manufacturing. In essence, following the molecule.

End-to-end platforms have improved dramatically in recent years, offering flexible data models that adapt across teams, point-and-click dashboards and reports, ETL-based APIs for high-throughput data, configurable schemas, and audit trails. Such enabling technology is now widely seen as critical to improving R&D’s priority outcomes: speed, probability of success, scalability, quality, and cost.

2. The industry is tackling instrument connectivity — and making headway.

The challenge of automating and standardizing data capture from instruments has long plagued the industry. A majority of scientists and IT respondents report that their organization uses more than 100 lab instruments, and nearly half (47%) indicate that fewer than three out of five of these instruments are connected to software that supports data capture.

However, progress is underway, thanks to a movement away from proprietary data formats and vendor lock-in toward open industry standards and data integrations. Earlier this year, the Allotrope Foundation achieved an important milestone, launching publicly available data standards for lab instruments using the Allotrope Simple Model (ASM). Benchling recently built on this momentum with the launch of Connect, which automates instrument data capture and management using an open-source approach: it maps instrument output to the ASM and makes the converter code freely available on GitHub.
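The converter idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Benchling Connect's code and not the real ASM schema: the CSV layout, the column names, and the output field names (`well_location`, `absorbance`, and so on) are all hypothetical. What it shows is the pattern: each vendor format gets its own mapping onto one shared, explicit model, so downstream software only needs to understand that model.

```python
import csv
import io
import json

# Hypothetical plate-reader export with vendor-specific column names.
VENDOR_CSV = """Well,Abs 450nm,Read Time
A1,0.512,2023-06-01T10:00:00
A2,0.487,2023-06-01T10:00:05
"""

# Vendor column -> neutral field name. Illustrative only; these are NOT ASM terms.
COLUMN_MAP = {
    "Well": "well_location",
    "Abs 450nm": "absorbance",
    "Read Time": "measurement_time",
}


def convert(raw_csv: str, instrument_id: str, wavelength_nm: float) -> dict:
    """Map one vendor CSV export onto a single vendor-neutral, JSON-ready document."""
    measurements = []
    for row in csv.DictReader(io.StringIO(raw_csv)):
        # Keep only columns we know how to map; rename them to neutral field names.
        record = {COLUMN_MAP[col]: value for col, value in row.items() if col in COLUMN_MAP}
        # Make the unit explicit instead of leaving it implied by the vendor header.
        record["absorbance"] = {"value": float(record["absorbance"]), "unit": "AU"}
        measurements.append(record)
    return {
        "instrument_id": instrument_id,
        "detection_wavelength": {"value": wavelength_nm, "unit": "nm"},
        "measurements": measurements,
    }


doc = convert(VENDOR_CSV, instrument_id="plate-reader-01", wavelength_nm=450)
print(json.dumps(doc, indent=2))
```

With this design, supporting a new instrument means writing one new mapping rather than changing every system that consumes the data.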

The impact: R&D organizations can automate time-consuming, manual collection of lab instrument data and consolidate the management of experimental data and metadata on a centralized platform.

3. Security used to be a barrier to adopting cloud-based software. Now it’s the opposite: security is a benefit.

Security, compliance, and privacy have always been priorities for biotech, but in recent years, treating data as a strategic asset has become more crucial than ever. As scientific techniques and R&D processes have become more complex with biologics, the amount of data generated has massively increased, and data has become a biotech’s lifeblood.

Biotechs know that managing cybersecurity risks appropriately today requires engineering, automation, real-time analytics, threat intelligence, significant tooling, and more. Modernizing a company’s cybersecurity architecture takes a tremendous investment and requires team and cultural changes that have been challenging for biotechs. But a shift is happening. Biotechs are now taking advantage of the economies of scale that mature cloud computing providers offer in cybersecurity, resiliency, and disaster response. Cloud providers have both a duty and an incentive to be secure: they invest far more in security than most companies can afford to on their own, and they have deep expertise. More often than not, maintaining an on-prem strategy exposes a biotech to more risk, because 100% of the security responsibility and resourcing falls on the company.

The biotech industry is achieving more secure outcomes by taking advantage of the economies of scale that cloud platforms provide. This is a win for R&D IT, helping it move away from legacy, on-prem systems that don’t offer the same level of connectivity, productivity, and, of course, security.

4. Scientists are moving away from generic software tools as built-for-science software gains ground.

Today’s scientists are coming up in computational labs, and this changes their expectations. No more generic software with poor UX. No more settling for horizontal SaaS that requires significant customization for science. Scientists are demanding tools purpose-built for science, and the market is listening.

Tools that capture, manage, and analyze data, especially biological data, in a user-friendly interface that scientists actually enjoy using are now seen as foundational. According to Benchling’s 2023 State of Tech in Biopharma report, 70% of scientists and IT respondents have adopted an R&D data platform.

With growing demand, a robust ecosystem of cloud-based, ‘built-for-bio’ software has emerged, helping scientists process, share, and collaborate on growing biologic data sets. Often, the people building these tools are scientists who have worked in the industry themselves, and they hold the tools to the high bar of what they would want to use to make the science easier. Pluto, PipeBio, and Quartzy are examples.

5. AI is the carrot for better biotech data practices.

R&D scientists are eager to incorporate AI/ML into their work to make predictions and work more efficiently, and they know that well-organized, structured, high-quality data is the foundation for doing so.

As much as AI will drive transformational changes in how we discover new drugs, one less-discussed but equally beneficial impact of AI on biotech is that it requires the industry to prioritize strong data foundations. For IT, AI is a forcing function to operationalize the flow of data coming from experimental pipelines and to integrate previously siloed data sets end-to-end. Unsexy as it is, companies need to build their data strategy and systems before they can benefit from AI and ML. Beyond simply collecting data, the key is collecting it in a standardized way and anticipating how it will be consumed. Companies need to design their data systems for the analytics, AI, and ML they aim to layer on top.

In the coming years, the importance of scientific data and AI will only grow, putting additional pressure on biopharma to abandon legacy tech and build a stronger digital foundation.

In this new normal, IT teams in biotech and biopharma will play an increasingly pivotal role in ushering in new data management strategies. With cohesive data strategies and modern tech, therapeutics companies can set themselves up for success over the next two decades of discovery.

The State of Tech in Biopharma Report

Explore how biopharma is adopting AI in our report based on interviews with more than 300 biopharma R&D and IT experts.
