What if billions in AI funding led to the same number of drugs?

Drug discovery has quickly become the sexiest place to apply AI. Billions of dollars are being invested in AI-driven TechBios, and in an industry where nothing changes overnight, even large pharma are already touting AI as key to how they’re transforming their discovery engines.

But in the race for AI in drug discovery, investing so heavily in scaling one part of the system overlooks the rest. Without reimagining our R&D system to handle the new speed and scale of AI-driven discovery, we risk over-promising and under-delivering to the people who need new medicines.

The power of AI is being throttled by our R&D system

New AI models for drug discovery deserve serious attention; this is without question. Within the next 5 to 10 years, AI will fundamentally change the way drugs are designed, with the potential to produce an order of magnitude more high-quality candidates against a broad range of new diseases. In the last year alone, AI has been used to identify novel targets in areas like cardiomyopathy, to generate novel antibodies, and even to design newer modalities like optimized mRNA vaccines for influenza.

But the focus for AI can't just be on discovery. The rest of the pharma R&D system — how drugs are developed, tested, approved, and manufactured — will need to accommodate this vast increase in speed and scale.

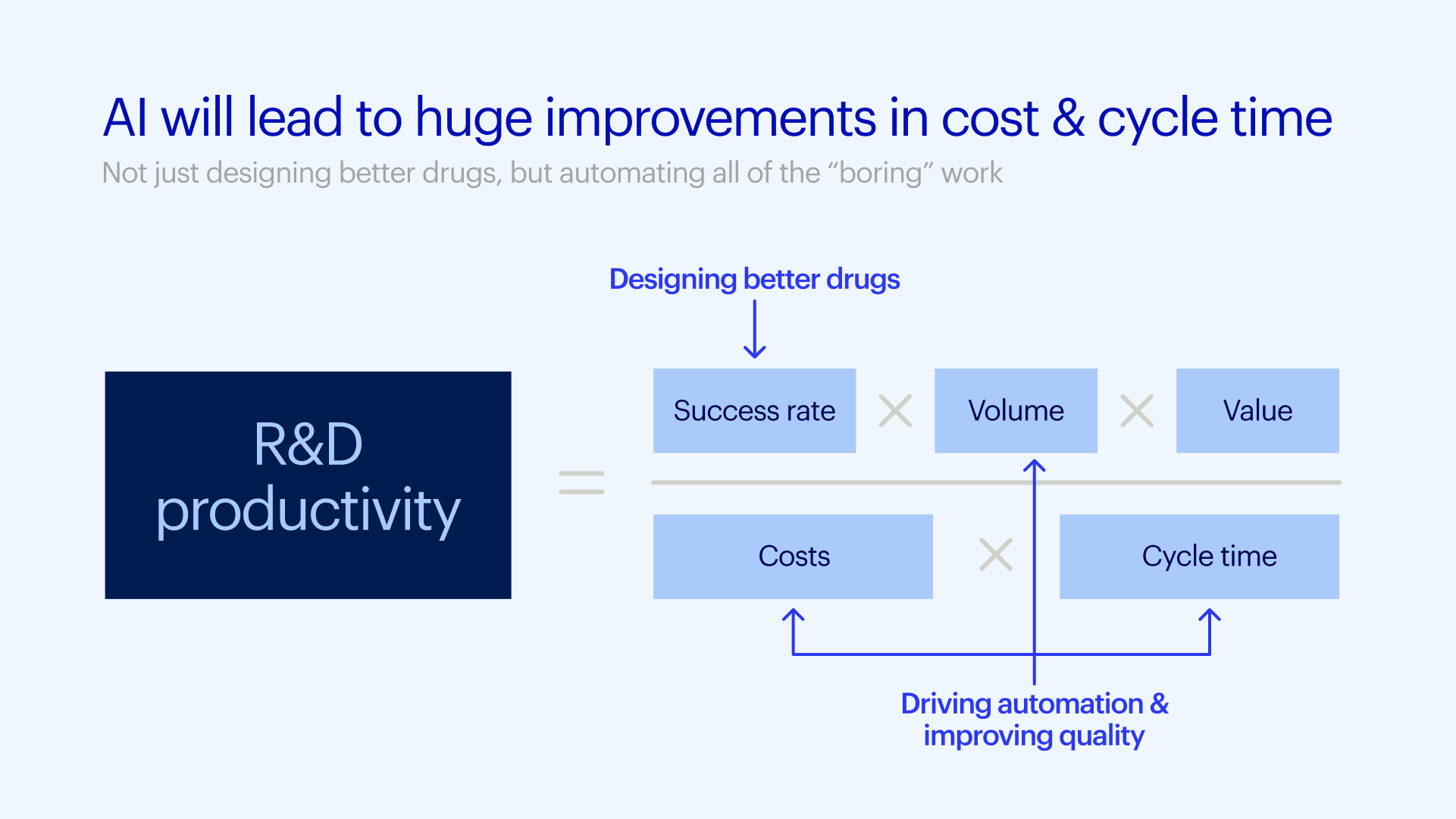

Pharma R&D has been heading in the wrong direction, becoming progressively less efficient over the last few decades. Large pharma companies now spend more than $6 billion on R&D per approved drug, compared to just $40 million (in today's dollars) in the 1950s, and approximately 85% of that spending comes after discovery. The imbalance between near-zero-cost AI-driven drug discovery and skyrocketing costs for clinical trials and regulatory approval will create a bottleneck that keeps promising drugs from reaching patients, unless AI is applied equally across the entire R&D lifecycle.
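
The gap in those figures is easy to quantify. This short Python sketch uses only the numbers quoted above plus one assumption that is not from the article: that roughly 70 years separate the 1950s figure from today's. Treat it as back-of-the-envelope arithmetic, not a model of R&D costs.

```python
import math

# Figures quoted in the article (the $6 billion figure is a lower bound: "more than")
cost_per_drug_today = 6e9      # USD of R&D per approved drug, large pharma
cost_per_drug_1950s = 40e6     # USD per approved drug in the 1950s, in today's dollars
post_discovery_share = 0.85    # approximate share of spending that comes after discovery

# Assumption, not from the article: about 70 years separate the two figures
years = 70

ratio = cost_per_drug_today / cost_per_drug_1950s
post_discovery_spend = post_discovery_share * cost_per_drug_today
annual_growth = ratio ** (1 / years) - 1               # compound annual growth rate
doubling_time = math.log(2) / math.log(1 + annual_growth)

print(f"Cost per approved drug rose {ratio:.0f}x")
print(f"Post-discovery spend: ${post_discovery_spend / 1e9:.1f}B per approved drug")
print(f"Implied growth: {annual_growth:.1%} per year, doubling every {doubling_time:.1f} years")
```

Under that assumption, the quoted figures imply that about $5.1 billion of each approved drug's R&D bill is spent after discovery, and that cost per approved drug has grown by roughly 7.4% a year, doubling about every decade. Making discovery cheaper leaves most of that bill untouched.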

To be sure, the biopharma industry has long known it needs to evolve its R&D systems. The paper describing the rapid decline in R&D efficiency, a trend often called Eroom's Law (Moore's Law spelled backward), is more than a decade old. AI is now disrupting nearly every industry, bringing automation, scalability, and intelligence with it. It should be used to improve every part of the R&D lifecycle, not just discovery, to increase throughput and improve efficiency.

Rethinking the R&D lifecycle with AI

Just look at how AI-driven drug discovery is already putting new and different pressures on lab-based experimentation. Drug candidates discovered with AI need to be tested through experiments in the lab, which in turn generate experimental data that is used to further refine AI models through a "lab in a loop" process. An enormous influx of new AI-generated candidates and new data-hungry AI models means labs need to run experiments at a vastly higher scale.
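
At its core, a "lab in a loop" is an iterative cycle: a model proposes candidates, the lab measures them, and the measurements refine the model for the next round. The Python sketch below is a deliberately toy version under stated assumptions: `lab_assay` is a hypothetical scoring function standing in for real experiments, the "model" is a nearest-neighbor lookup over already-measured candidates, and candidates are chosen greedily by predicted score. Real pipelines would use trained ML models and selection strategies that also weigh uncertainty.

```python
from typing import Callable, Dict, List


def lab_assay(x: int) -> float:
    """Hypothetical stand-in for a wet-lab measurement; higher is better, best at x = 14."""
    return -(x - 14) ** 2


def predict(x: int, measured: Dict[int, float]) -> float:
    """Toy 'model': predict the activity of the closest candidate already measured."""
    nearest = min(measured, key=lambda m: abs(m - x))
    return measured[nearest]


def lab_in_the_loop(candidates: List[int], assay: Callable[[int], float],
                    seed_batch: List[int], rounds: int, batch_size: int) -> Dict[int, float]:
    # Initial experiments give the model something to learn from
    measured = {x: assay(x) for x in seed_batch}
    for _ in range(rounds):
        untested = [c for c in candidates if c not in measured]
        if not untested:
            break
        # Model step: rank untested candidates by predicted activity
        ranked = sorted(untested, key=lambda c: predict(c, measured), reverse=True)
        # Lab step: test the top proposals; results feed the next round's predictions
        for c in ranked[:batch_size]:
            measured[c] = assay(c)
    return measured


results = lab_in_the_loop(list(range(21)), lab_assay, seed_batch=[0, 10, 20],
                          rounds=3, batch_size=3)
best = max(results, key=results.get)
print(f"{len(results)} experiments, best candidate {best} with score {results[best]}")
```

With 21 candidates and three seed experiments, three rounds of three experiments each let the toy loop reach the best candidate after testing 12 of the 21 options. The point is the shape of the cycle: every extra candidate or data-hungry model multiplies the experiments the lab has to run.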

That can't be achieved by simply optimizing the manual processes at the bench that labs rely on today. Instead, labs need to be reinvented around complex imaging and single-cell omics assays that allow a more complete understanding of biology, along with robotic automation that enables these assays to be run at scale. Although this trend has already started, AI applications can rapidly accelerate it.

The shortage of specialized engineers who can analyze data from complex assays and orchestrate lab robotics is one key bottleneck. AI models like scGPT can accelerate data analysis by automating code-intensive tasks such as reference mapping or cell annotation. AI agents will also enable scientists to set up robotic automation using natural language alone, democratizing access across the industry.

Advancements in AI also have the potential to address key challenges in clinical trials. Take patient recruitment, often the most time-consuming part of a trial. Even though millions of people are needed to participate in clinical trials, fewer than 5% of Americans have participated in clinical research of any kind.

Sound clinical trial design requires randomization, but randomization takes participants out of the driver's seat: they no longer have the final say over their treatment, which creates a barrier to recruitment. In 2022, the European Medicines Agency (EMA) issued a qualification opinion allowing the use of AI models to generate predicted control outcomes for Phase 2/3 trials from historical control data, ultimately requiring fewer participants to face this difficult randomization choice.
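
How much can a prediction shrink a trial? One common way to reason about it, assumed here rather than taken from the EMA opinion, is to use the model's predicted outcome as a covariate: if the prediction correlates with the actual outcome with coefficient `rho`, covariate adjustment shrinks the residual variance by roughly a factor of (1 - rho**2), and the required sample size shrinks with it. The Python sketch uses the textbook normal-approximation formula for comparing two means; the effect size, standard deviation, and correlation are illustrative values, not data from any trial.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(delta: float, sigma: float, alpha: float = 0.05,
              power: float = 0.8, rho: float = 0.0) -> int:
    """Approximate participants per arm for a two-arm comparison of means.

    delta: assumed true difference between arms
    sigma: assumed outcome standard deviation
    rho:   assumed correlation between the model-predicted outcome and the real one;
           adjusting for the prediction leaves residual variance sigma**2 * (1 - rho**2)
    """
    z = NormalDist()                   # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    residual_var = sigma ** 2 * (1 - rho ** 2)
    return ceil(2 * (z_alpha + z_beta) ** 2 * residual_var / delta ** 2)


baseline = n_per_arm(delta=0.5, sigma=1.0)
adjusted = n_per_arm(delta=0.5, sigma=1.0, rho=0.5)
print(f"Unadjusted: {baseline} per arm; with predicted outcomes (rho=0.5): {adjusted} per arm")
```

With an assumed effect size of half a standard deviation, 5% two-sided significance, and 80% power, the unadjusted design needs 63 participants per arm; an assumed correlation of 0.5 brings that down to 48. Better predictions (higher `rho`) give larger savings.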

Beyond the lab work and clinical trials required to get a product to market, there is a great deal of knowledge work: R&D managers reporting decisions at key program milestones, medical writers drafting filings for health authorities, quality assurance staff confirming data integrity, and much more. This knowledge work translates R&D data into decisions and documentation, and it requires answering questions and generating content in the natural language of scientists. Scientific large language models that build on popular general-purpose models have obvious potential here, such as BioGPT, a GPT-style model trained on biomedical literature, or BioMedGPT-LM, which is fine-tuned from Llama.

But a generative pre-trained transformer built for scientific language isn't enough. The real challenge is rethinking how the underlying R&D data are structured and managed. To automate knowledge work, these large language models need to operate on data that comes from the lab, and that foundation has many problems today. Large pharma companies often run hundreds of software applications within R&D labs alone, leading to data silos and a lack of standardization and interoperability that make it excruciatingly difficult to apply AI effectively. At Benchling, we’re taking the same modern platform approach that has transformed how many businesses digitally manage their sales or financial data and applying it to R&D to make automation of knowledge work a reality.

AI will change how pharma competes

Another key element in the work of biopharma companies needs to be reinvented to make the promise of AI in biotech a reality: how companies compete.

ProFluent Bio, a Berkeley-based biotech, recently open-sourced a novel, AI-designed, CRISPR-based gene editor for human gene editing. Open-sourcing such intellectual property was previously unthinkable. In an era where scientists working with AI can design many more drugs than could ever be brought to market (imagine a world of drug abundance!), the competitive focus will shift away from protecting intellectual property and toward speed to market and creating step changes in the efficiency of clinical trials and regulatory approvals.

This shift will, in turn, help solve the biggest issue in making AI-driven drug discovery even more powerful: access to data. The historic focus on intellectual property has created an industry culture that treats all experimental data as proprietary. Yet the success of AlphaFold2 and AlphaFold3 is entirely predicated on the public availability of protein sequences and experimentally resolved structures. Progress in developing new foundation models will require data abundance.

Open-source software has long been a tenet of the tech industry, fundamentally altering the nature of collaboration and competition. If the biopharma industry wants to realize the benefits of AI, companies must work together to generate the data needed to power it. Companies are already starting to collaborate on open-source software projects for managing data in areas like molecular modeling, connectivity to lab instruments, and bioinformatics code. Pre-competitive collaboration can extend even further, to how AI models themselves are built. Federated learning allows companies to update a shared global model without sharing their underlying datasets with competitors. This approach has already significantly improved AI models for small molecules, and it could have an even larger impact for large molecules if companies invest in it together.
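
Federated learning comes in several variants, and the article doesn't say which one the small-molecule work used. As an illustration, here is a minimal Python sketch of federated averaging (FedAvg), one widely used scheme: each company trains a copy of the shared model on its own data, only the resulting parameters are sent back, and the new global model is the average of those parameters weighted by each company's dataset size. The one-variable linear model and both company datasets are hypothetical toy values.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (feature, label) pairs held privately by one company


def local_update(w: float, b: float, data: Dataset,
                 lr: float = 0.1, epochs: int = 20) -> Tuple[float, float]:
    """Train y ~ w*x + b on one company's data only (full-batch gradient descent on MSE)."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_averaging(clients: List[Dataset], rounds: int = 50) -> Tuple[float, float]:
    w, b = 0.0, 0.0  # shared global model
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        # Each company starts from the global model; only parameters come back
        updates = [local_update(w, b, d) for d in clients]
        # Weighted average of parameters, weights proportional to local dataset size
        w = sum(len(d) * uw for d, (uw, _) in zip(clients, updates)) / total
        b = sum(len(d) * ub for d, (_, ub) in zip(clients, updates)) / total
    return w, b


company_a = [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)]
company_b = [(-2.0, -3.0), (2.0, 5.0)]
w, b = federated_averaging([company_a, company_b])
print(f"global model: y = {w:.3f}x + {b:.3f}")
```

Both toy datasets follow y = 2x + 1, so the global model converges to that line even though the averaging step only ever sees model parameters, never the raw (x, y) pairs. Real deployments typically add safeguards such as secure aggregation, because shared parameters can still leak information about the underlying data.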

The era of biotech AI

The era of rational drug design, an atom-by-atom, computer-aided approach to designing drugs for a specific target, started more than 30 years ago. It had a tremendous impact, leading to breakthroughs for debilitating diseases like cystic fibrosis. We are now entering an era of AI-driven drug discovery, which promises to be AI's greatest contribution to humanity by finding treatments for the thousands of currently untreatable diseases.

If the bulk of biopharma R&D continues to operate as it does today, a treasure trove of new drugs will be created that may never make their way to patients. Applying AI beyond drug discovery and using it to reinvent all elements of R&D will ensure that doesn't happen.
